We've used gpt-4o-mini to automatically populate the bodies of the 100k+ wikibot articles. The process is described in Section "Wikibot LLM body population". The entire generation cost only $3.

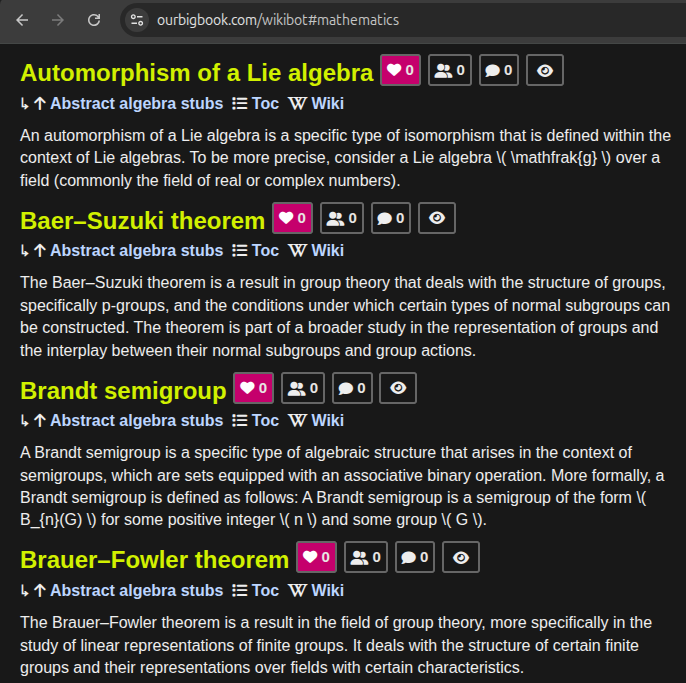

Some of the abstracts can be seen, e.g., on Wikibot's user page at ourbigbook.com/wikibot#mathematics, or on specific topic pages such as ourbigbook.com/go/topic/qijue

We limited the output to 100 tokens per article, and for each topic X simply queried:

What is X?

Unfortunately there is quite a bit of "obviously LLM-generated trash" in the results. We removed some of it with sed, but some stray markdown remains, as do lists with a single item that got cut short by the 100-token limit.
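To make the query and cleanup steps concrete, here is a minimal Python sketch. It assumes the OpenAI Chat Completions request format (`model`, `messages`, `max_tokens`) and its `finish_reason` field, which is `"length"` when a completion hits the token limit. `build_request`, `clean_output` and the specific cleanup rules are hypothetical illustrations, not the actual scripts or sed commands we ran. It only builds request bodies and post-processes text, so no network access is needed.

```python
import json
import re

MODEL = "gpt-4o-mini"
MAX_TOKENS = 100  # per-article output limit


def build_request(topic: str) -> dict:
    """Hypothetical helper: Chat Completions request body for one topic."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": f"What is {topic}?"}],
        "max_tokens": MAX_TOKENS,
    }


def clean_output(text: str, finish_reason: str) -> str:
    """Hypothetical cleanup rules for stray markdown and truncated lists."""
    # Unwrap markdown bold markers such as **term** or __term__.
    text = re.sub(r"(\*\*|__)(.+?)\1", r"\2", text)
    # Strip markdown heading markers at the start of lines.
    text = re.sub(r"^#+\s*", "", text, flags=re.MULTILINE)
    lines = text.rstrip().split("\n")
    # If generation stopped at the token limit, a trailing list item
    # ("- ...", "* ..." or "1. ...") was most likely cut mid-sentence: drop it.
    if (
        finish_reason == "length"
        and len(lines) > 1
        and re.match(r"\s*(?:[-*]|\d+\.)\s", lines[-1])
    ):
        lines.pop()
    return "\n".join(lines).rstrip()


if __name__ == "__main__":
    print(json.dumps(build_request("Qijue")))
    raw = "Qijue is a term.\n\n1. **Origin**: The word comes"
    print(clean_output(raw, "length"))  # prints "Qijue is a term."
```

Using `finish_reason` rather than guessing from the text alone means complete answers that happen to end in a list are left untouched.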

This was mostly for fun, but the text might sometimes serve as a good quick definition on previously empty topic pages, and in the list of articles on the same topic shown under a given article.

Hopefully it will also kick some of our pages out of Google's "Soft 404" limbo, i.e. pages that Google refuses to index because they look empty, treating them essentially as 404s (nonexistent pages). It may also bring in some long-tail traffic for the more niche subjects.

Automatic topic linking is also active on these pages, as everywhere else on the site, so everything ends up interlinked automatically.
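As a rough illustration of the idea only (OurBigBook's real implementation is not shown here and almost certainly differs), a greedy longest-match linker could turn runs of words that match known topic ids into links. The `slugify` scheme (lowercase words joined by hyphens, modeled on URLs like `/go/topic/qijue`), the `max_words` limit, and the markdown link output are all assumptions made for this sketch.

```python
import re


def slugify(phrase: str) -> str:
    # Assumed topic-id scheme: lowercase alphanumeric words joined by hyphens.
    return "-".join(re.findall(r"[a-z0-9]+", phrase.lower()))


def link_topics(text: str, topics: set[str], max_words: int = 3) -> str:
    """Hypothetical greedy longest-match linking of phrases to topic ids."""
    # Tokens alternate between word runs (\w+) and non-word runs (\W+).
    tokens = re.findall(r"\w+|\W+", text)
    out = []
    i = 0
    while i < len(tokens):
        match_end = None
        if re.fullmatch(r"\w+", tokens[i]):
            # Try the longest phrase first: k words span tokens[i : i + 2k - 1].
            for k in range(max_words, 0, -1):
                end = i + 2 * k - 1
                if end > len(tokens):
                    continue
                # Only allow plain whitespace between the words of a phrase.
                if any(not sep.isspace() for sep in tokens[i + 1:end:2]):
                    continue
                if slugify("".join(tokens[i:end])) in topics:
                    match_end = end
                    break
        if match_end is None:
            out.append(tokens[i])
            i += 1
        else:
            phrase = "".join(tokens[i:match_end])
            out.append(f"[{phrase}](/go/topic/{slugify(phrase)})")
            i = match_end
    return "".join(out)


if __name__ == "__main__":
    topics = {"qijue", "machine-learning"}
    print(link_topics("A qijue is a kind of machine learning model.", topics))
```

Preferring the longest match means "machine learning" links to its own topic rather than to a shorter "machine" topic; a real linker would also need to skip stop words and text that is already a link.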

Announced at: